illphated

Can You Run HiDream-I1-Dev on an M1 Max MacBook?

Short answer: Nope. And here’s why.

The HiDream-I1-Dev model from Hugging Face has been making waves for its cutting-edge AI image generation capabilities. But if you’re packing an Apple Silicon MacBook—specifically an M1 Max—you’re probably wondering if you can run it locally.

Let’s cut through the hype and get to the facts.

Why HiDream-I1-Dev Is a No-Go on M1 Max

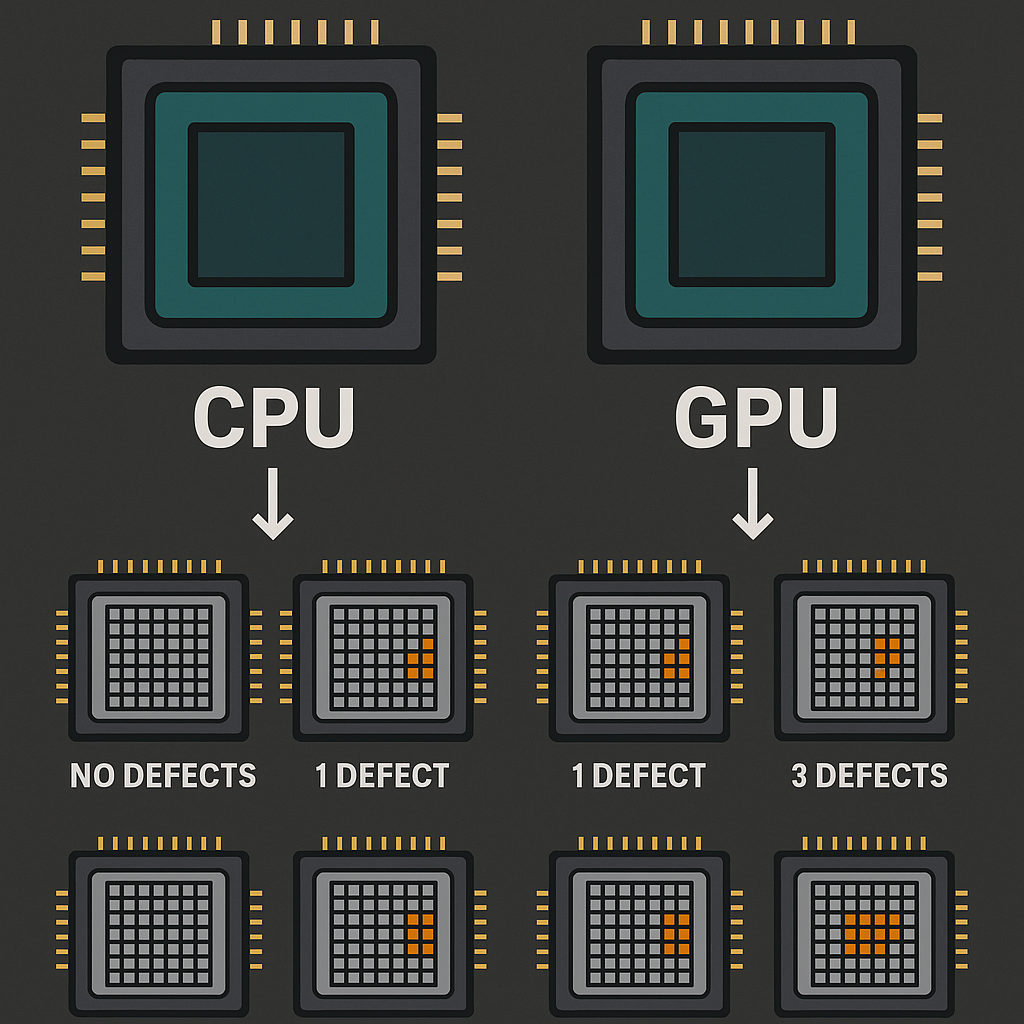

1. GPU Architecture

HiDream-I1-Dev is designed to run on NVIDIA Ampere-or-newer GPUs—think A100, RTX 3090, or 4090. The M1 Max’s integrated Apple GPU is a completely different beast and does not support the CUDA or Flash Attention features the model depends on.

2. VRAM Requirements

The “Dev” variant technically needs at least 16 GB of dedicated GPU VRAM, but real-world use often pushes past 25 GB. The M1 Max shares system memory between CPU and GPU, and while it can have 32–64 GB total RAM, it can’t deliver the raw dedicated VRAM performance the model expects.

3. Mac Compatibility Issues

Even if you try to brute-force it, reports from the community say ComfyUI on Mac produces nothing but blank images when attempting to run HiDream. The core libraries simply aren’t optimized for Apple Silicon.

The Cold, Hard Comparison

Requirement HiDream-I1-Dev Needs M1 Max MacBook (Apple Silicon)

GPU Type NVIDIA Ampere+ Apple GPU (non-CUDA, non-NVIDIA)

GPU VRAM ≥ 16 GB Shared system memory, not true VRAM

Flash Attention / CUDA Required Not supported

Known Mac Support None (black output) Major compatibility issues

So What’s the Verdict?

Unless Apple suddenly decides to bolt a 4090 into your MacBook, the HiDream-I1-Dev model isn’t running locally on your M1 Max anytime soon. The CUDA/FlashAttention requirements, VRAM demands, and architecture mismatch make it a technical dead end for now.

Workarounds & Alternatives

If you’re determined to try HiDream without switching to a PC:

Use Hugging Face Spaces — Run it in the browser using their cloud GPUs.

Rent a Remote GPU Server — Services like RunPod, Vast.ai, or AWS with NVIDIA GPUs can handle it.

Wait for Apple Silicon Support — If developers port it, M1/M2-native execution might become possible.

Until then, your M1 Max can still handle plenty of other AI models—but HiDream-I1-Dev will have to stay in the cloud.

EmailURL

h62g4g

2jj3o1

ut6l8t

i7rmb6

bn0v3y

kd2i6y

bedor5